Apr 01, 2025

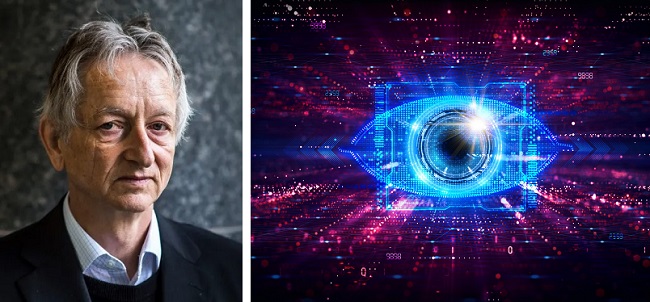

Geoffrey Hinton, a Canadian cognitive psychologist and computer scientist, is often called the 'godfather' of artificial intelligence. With two of his graduate students at the University of Toronto, he developed neural-network and deep-learning techniques that became the intellectual foundation for today's AI systems.

In AI, neural networks are systems loosely modelled on the human brain, and they are what learn and process information. Much as a person does, a neural network learns from experience, that is, from examples; learning with networks built from many such layers is called deep learning.

Having quit his job at Google, where he had worked for more than a decade, Hinton has joined the chorus of critics of generative AI. He now says: "Right now, what we're seeing is things like GPT-4 eclipses a person in the amount of general knowledge it has and it eclipses them by a long way. In terms of reasoning, it's not as good, but it does already do simple reasoning," and "given the rate of progress, we expect things to get better quite fast. So, we need to worry about that."

Hinton points out that the intelligence we are developing is very different from the intelligence we human beings have, because biological and digital intelligence differ fundamentally. The big difference, he says, is that digital systems can run many copies of the same set of weights, the same model of the world. He adds, "All these copies can learn separately but share their knowledge instantly. So, it's as if you had 10,000 people and whenever one person learned something, everybody automatically knew it. And that's how these chatbots can know so much more than any one person."
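To make the idea of copies that "learn separately but share their knowledge instantly" a little more concrete, here is a toy sketch. It assumes the copies share what they learn by averaging their gradient updates, which is how data-parallel training of neural networks commonly works; it is not Hinton's own example, and the one-parameter model, the data, and the function names are all hypothetical.

```python
# Toy illustration (hypothetical model and data): several identical copies of a
# one-parameter model y = w * x each learn from their own examples, then pool
# what they learned by averaging gradients, so every copy keeps the same weight.

def gradient(w, data):
    """Mean-squared-error gradient of y = w * x over one copy's examples."""
    return sum(2 * (w * x - y) * x for x, y in data) / len(data)

def train_copies(shards, steps=200, lr=0.01):
    weights = [0.0] * len(shards)  # every copy starts from the same weight
    for _ in range(steps):
        # Each copy computes an update from its own, separate experience.
        grads = [gradient(w, shard) for w, shard in zip(weights, shards)]
        # "Instant sharing": average the updates and apply them to every copy.
        avg = sum(grads) / len(grads)
        weights = [w - lr * avg for w in weights]
    return weights

# Three copies each see different examples of the same hidden rule, y = 3x.
shards = [[(1, 3), (2, 6)], [(3, 9)], [(4, 12), (5, 15)]]
weights = train_copies(shards)
print(weights)  # all three copies end up with (almost exactly) the same w near 3
```

No single copy sees all five examples, yet after training every copy holds the same weight learned from all of them, which is the sense in which one copy's learning becomes "everybody's".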

AI therefore offers large potential benefits but also carries largely unknown risks for society, and both are likely to arrive quickly, because Microsoft and Google are competing fiercely for first-mover advantage, alongside OpenAI, which is partly funded by Microsoft.

Experts in the field of AI therefore argue that "there is an enormous upside from this technology, but it's essential that the world invests heavily and urgently in AI safety and control". Even Sundar Pichai, CEO of Google, is reported to have said that "society must quickly adapt with regulations for AI in the economy, laws to punish abuse, and treaties among nations to make AI safe for the world".

Now the big question is: how do we control a technology that does not respect borders? Should there be a uniform set of global standards and practices? Perhaps that will not happen, because China, the European Union, Brazil and others have already drafted their own legislation to regulate AI. China's draft rules, for instance, "would require (generative AI) services to generate content that reflects the country's socialist values". But the US, the most advanced country in AI research, has yet to come up with any form of oversight.

Such a fragmented approach can hardly regulate AI, which has no sense of national boundaries. Moreover, AI, being a unique technology, raises a complex set of challenges. For instance, algorithms, the real players behind AI, need lots of data for their training, and it is the quality of that data that ultimately defines the quality of the AI: the databases on which an AI learns determine how accurate and unbiased its output and advice will be. So the question is: should we permit learning from all the information available on the net, good and bad? Or should we ban some sources?

On top of that, because much of the training data is sourced from the open web, there is an embedded risk: it makes AI highly vulnerable to a kind of cyber-attack known as 'data poisoning'.

Data poisoning means modifying a training data set, or adding extraneous information to it, so that the algorithm trained on it learns undesirable behaviour patterns. The attackers' objective is to exploit the AI built from such data to achieve their own goals.

Data poisoning can take several forms. For instance, an attacker may modify a small portion of the data meant for training an algorithm, or may inject a large volume of fabricated data. In either case, the AI model ends up making incorrect predictions or taking undesirable actions. And the crux of the whole problem is that, like a real poison, poisoned data goes unnoticed until the damage is done.
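The injection form of data poisoning can be illustrated with a deliberately tiny sketch. It assumes a very simple "nearest-centroid" classifier, which labels a new item according to whichever class average it lies closest to; real AI systems are far more complex, and the data, labels and function names here are hypothetical.

```python
# Toy illustration (hypothetical data): a nearest-centroid classifier trained on
# 1-D scores, before and after an attacker injects mislabelled points.

def train(data):
    """Return the average (centroid) score for each label."""
    centroids = {}
    for label in {lbl for _, lbl in data}:
        points = [x for x, lbl in data if lbl == label]
        centroids[label] = sum(points) / len(points)
    return centroids

def predict(centroids, x):
    """Assign x to the label whose centroid is closest."""
    return min(centroids, key=lambda label: abs(x - centroids[label]))

# Clean training set: "safe" items score low, "harmful" items score high.
clean = [(1.0, "safe"), (2.0, "safe"), (3.0, "safe"),
         (7.0, "harmful"), (8.0, "harmful"), (9.0, "harmful")]

# Poisoning by injection: the attacker adds high-scoring points falsely
# labelled "safe", dragging the "safe" centroid towards the harmful region.
poisoned = clean + [(9.5, "safe")] * 6

test_item = 6.5  # clearly closer to the genuinely harmful examples

clean_label = predict(train(clean), test_item)
poisoned_label = predict(train(poisoned), test_item)
print(clean_label)     # → harmful
print(poisoned_label)  # → safe
```

Nothing in the poisoned model's code has changed; only its training data has, which is why the flaw stays invisible until the model starts passing harmful items as safe.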

Perhaps it is with these challenges in mind that Hinton appears so worried about the future of mankind: "It is hard to see how you can prevent the bad actors from using it [AI] for bad things."

But the fact remains that the cat’s out… either we must adapt, or … …

Image (c) istock.com

21-May-2023

By Gollamudi Radha Krishna Murty