Apr 14, 2025

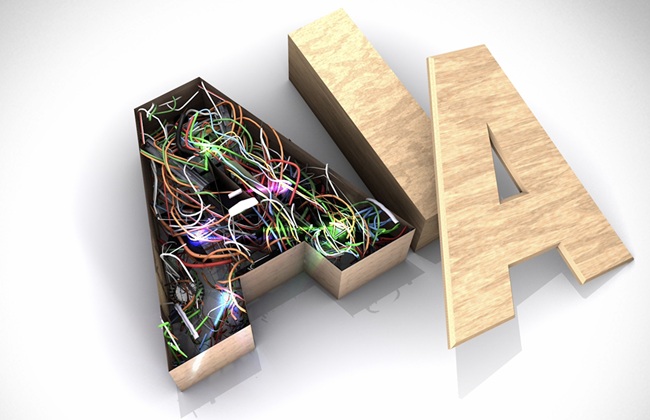

Can Innovation Survive Without Destroying the Planet?

Is AI’s Power Consumption the Silent Threat No One Wants to Talk About?

Artificial Intelligence (AI) is revolutionizing industries, reshaping economies, and redefining human capability. But beneath the surface of this digital marvel lies an inconvenient truth: AI is an energy guzzler of staggering proportions. How long can we ignore projections that AI infrastructure could soon consume as much electricity as entire nations? How sustainable is this relentless pursuit of progress when the very technology we celebrate could be accelerating an environmental crisis?

The AI energy paradox is real — its potential is limitless, but so are its energy demands. With AI models growing exponentially, their thirst for computational power is pushing global electricity consumption to unprecedented levels. And unless we act now, AI’s hunger for energy could become its greatest liability.

The Energy Hunger of AI: Why is it Consuming So Much Power?

1. AI Training: A Power-Hungry Process

Large-scale AI models, like OpenAI’s GPT-4 and Google’s Gemini, require immense computational resources to process vast datasets. Training a single large model can consume as much electricity as more than a hundred homes use in a year.

Fact Check: If NVIDIA ships 1.5 million AI servers per year by 2027, those servers, running at full capacity, could collectively consume about 85.4 terawatt-hours (TWh) of electricity annually, more than the yearly electricity usage of many small countries.
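The 85.4 TWh figure can be sanity-checked with simple arithmetic. The sketch below assumes each server draws about 6.5 kW around the clock; that per-server figure is an assumption not stated in this article, and the function name is purely illustrative.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def fleet_annual_twh(servers: int, kw_per_server: float, utilization: float = 1.0) -> float:
    """Estimate the annual electricity use of a server fleet in TWh.

    kw_per_server and utilization are assumptions supplied by the caller.
    """
    kwh_per_year = servers * kw_per_server * utilization * HOURS_PER_YEAR
    return kwh_per_year / 1e9  # 1 TWh = 1e9 kWh


# 1.5 million servers at an assumed 6.5 kW each, running continuously
estimate = fleet_annual_twh(1_500_000, 6.5)
print(f"{estimate:.1f} TWh per year")
```

Lowering the `utilization` argument shows how sensitive the total is to how hard the servers are actually run.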

Example: Training GPT-3, which was released in 2020, required an estimated 1,287 megawatt-hours (MWh) of electricity, equivalent to the energy used by 126 U.S. homes for an entire year. And with models getting bigger, the numbers are only increasing.
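To see what the household comparison implies, divide the training energy by the number of homes. This minimal sketch uses only the two figures quoted above; the function name is hypothetical.

```python
def implied_mwh_per_home(training_mwh: float, homes: int) -> float:
    """Annual electricity per home implied by an 'equivalent to N homes' comparison."""
    if homes <= 0:
        raise ValueError("homes must be positive")
    return training_mwh / homes


per_home = implied_mwh_per_home(1287, 126)
print(f"{per_home:.1f} MWh per home per year")
```

The result, roughly 10.2 MWh (about 10,200 kWh) per home per year, is the average household consumption that the comparison implicitly assumes.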

2. The “Inference” Problem: AI Doesn’t Stop Consuming Energy

Even after training, AI systems continue to drain power through inference — the process of generating responses, making decisions, and processing user inputs.

Fact Check: A single ChatGPT query consumes roughly 10 times as much energy as a traditional Google search. If Google replaced its entire search engine with AI-driven responses, its annual energy consumption could rise to about 29.3 TWh, comparable to the total electricity usage of Ireland.

Example: Every time you ask ChatGPT a question, you are unknowingly contributing to an energy-intensive process that spans multiple data centers worldwide. Multiply that by millions of users, and the problem becomes glaringly obvious.
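The "multiply by millions" step can be sketched in a few lines. Only the 10x ratio comes from this article; the 0.3 Wh baseline for a conventional search and the 10 million queries per day are illustrative assumptions, and the function name is hypothetical.

```python
def annual_gwh(queries_per_day: float, wh_per_query: float) -> float:
    """Annual energy in GWh for a given daily query volume and per-query energy."""
    wh_per_year = queries_per_day * wh_per_query * 365
    return wh_per_year / 1e9  # 1 GWh = 1e9 Wh


SEARCH_WH = 0.3          # assumed energy of one conventional search (not from the article)
AI_MULTIPLIER = 10       # ratio quoted in the article
QUERIES_PER_DAY = 10e6   # hypothetical usage level

search_total = annual_gwh(QUERIES_PER_DAY, SEARCH_WH)
ai_total = annual_gwh(QUERIES_PER_DAY, SEARCH_WH * AI_MULTIPLIER)
print(f"search: {search_total:.2f} GWh/yr, AI: {ai_total:.2f} GWh/yr")
```

Whatever baseline is chosen, the AI total stays ten times the search total, so the gap grows in direct proportion to usage.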

3. The Hardware Bottleneck: The “Memory Wall” Problem

A significant amount of energy in AI computation is wasted in the simple act of moving data between processing and memory units. This inefficiency — known as the “memory wall” problem — adds unnecessary strain on power grids.

Fact Check: AI-driven data centers are projected to consume 390 TWh of electricity annually in the U.S. alone by 2030, representing 7.5% of the nation’s total electricity demand.
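The two numbers in this projection also pin down the total U.S. demand it assumes for 2030. A minimal sketch using only the figures quoted above (the function name is hypothetical):

```python
def implied_total_twh(part_twh: float, share: float) -> float:
    """Total demand implied when part_twh represents the given share of it."""
    if not 0 < share <= 1:
        raise ValueError("share must be in (0, 1]")
    return part_twh / share


total = implied_total_twh(390, 0.075)
print(f"{total:.0f} TWh implied total U.S. demand")
```

In other words, the projection assumes national electricity demand of about 5,200 TWh per year by 2030.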

Example: Tech giants are scrambling for solutions, but traditional GPUs, while powerful, may not offer the efficiency improvements needed. New hardware architectures are being explored to tackle this issue at its root.

The Environmental & Economic Fallout of AI’s Energy Addiction

1. The Climate Crisis: Is AI Compromising Sustainability Goals?

Tech companies have pledged carbon neutrality, but AI’s energy consumption is pushing them in the opposite direction.

Fact Check: Google’s emissions have surged 48% over the past five years, driven largely by data center energy use tied to AI development. Microsoft’s emissions have risen about 30% since 2020, complicating its net-zero ambitions. If current data center expansion continues, global electricity consumption by data centers, including AI workloads, could double to over 1,000 TWh by 2026, roughly equal to the total electricity usage of Japan.

2. The Cost Barrier: Will AI Become a Luxury for the Wealthy?

AI is expensive — not just in development, but in energy costs. The increasing price of electricity could turn AI into a “rich man’s tool”, limiting access to only those who can afford its immense power needs.

Fact Check: Roy Schwartz, a computer scientist at Hebrew University, warns that electricity costs could widen the AI accessibility gap, leaving small businesses and developing nations behind.

Example: Some AI companies are relocating data centers to areas with cheaper electricity, even if those areas rely on fossil fuels — defeating the purpose of sustainability.

Solutions: How Can We Curb AI’s Energy Crisis?

Short-Term Fixes: Optimizing Existing Infrastructure

1. Smarter Data Centers: AI Managing AI

AI can be part of the solution by optimizing its own energy use.

2. Rethinking AI Models: Doing More with Less

3. Aligning AI Workloads with Renewable Energy Availability
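One way to picture this idea: deferrable jobs, such as training runs, are shifted to the hours when the grid's renewable share is highest. The sketch below is an illustration under stated assumptions, not a description of any company's scheduler. The hourly forecast values are hypothetical, as is the function name.

```python
from typing import Sequence


def best_start_hour(renewable_share: Sequence[float], job_hours: int) -> int:
    """Return the start index of the contiguous window with the highest
    total renewable share, using a sliding-window sum."""
    if job_hours <= 0 or job_hours > len(renewable_share):
        raise ValueError("job_hours must be between 1 and the forecast length")
    window = sum(renewable_share[:job_hours])
    best_sum, best_start = window, 0
    for start in range(1, len(renewable_share) - job_hours + 1):
        # Slide the window: add the hour entering it, drop the hour leaving it.
        window += renewable_share[start + job_hours - 1] - renewable_share[start - 1]
        if window > best_sum:
            best_sum, best_start = window, start
    return best_start


# Hypothetical forecast: fraction of grid power from renewables for each hour
forecast = [0.20, 0.25, 0.40, 0.65, 0.70, 0.60, 0.35, 0.20]
start = best_start_hour(forecast, job_hours=3)
print(f"Start the 3-hour job at hour {start}")
```

With this forecast, the job is scheduled to start at hour 3, covering the three hours with the highest combined renewable share.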

Long-Term Breakthroughs: Rethinking Hardware & Power Sources

1. In-Memory Computing: Reducing Energy Waste at the Source

2. Photonic Computing: Harnessing Light Instead of Electricity

3. The Nuclear Option: Is It the Future of AI Power?

Challenges

Final Thoughts: Can AI & Sustainability Coexist?

The AI energy crisis is not just an engineering problem — it is a global challenge that requires innovation, regulation, and responsibility. AI’s potential is limitless, but without sustainable energy solutions, we risk turning technological progress into an environmental catastrophe.

Will we act now to ensure AI remains an asset rather than a liability?

Will AI companies prioritize efficiency, or will they continue on a path of unchecked power consumption?

The future of AI is not just about what it can do — it’s about whether we can afford the energy to let it thrive.

The question remains: Will AI be the key to our progress, or the reason for our planet’s downfall?

Image (c) istock.com

15-Mar-2025

More by : P. Mohan Chandran